Vector Implementation - 1

Brainstorming

Let's try to think about this problem logically. Of course, the problem is that we don't know the number of elements to be pushed in the array beforehand; how do we design a data structure to tackle this problem and let the user push/pop dynamically?

Come on, put on your thinking hat and try to think about a few possible solutions yourself before reading on.

Possibility 1

Just use a really large array! (modern problems -> modern solutions xD)

I mean, if I don't exactly know the number of guests coming out of the 10 people I invited, I'd do an arrangement of meals for 10 people and be on the safe side, right?

Anyway, this approach has a few cons:

- How to define really large?

- If you're able to define really large, then there is memory wastage.

- Even then, the array is not really dynamic (it's pseudo-dynamic); It's not like the users are pushing new elements or popping them.

Possibility 2

What if we create an array of size, let's say, 10 initially? Now, till the first 10 elements, we're fine, but as soon as the 11th element comes in, we'll have to do something; what could that something be?

A possible approach is to create a new array of size 20, copy the first 10 elements from the old array, and then insert the 11th element.

What about the 21st element now? Again, we could make another array of size 30, and do the same as above.

The problem is the time complexity. If we keep on pushing back elements, every 10th push_back will be of O(N), which basically means that the overall time taken to push N elements is approximately (N/10)*N.

If you notice, option 1 will have a nice time complexity, but that's because we're wasting space, and in option 2, not a lot of space is wasted but time complexity for push_back is coming out to be O(N), which is unacceptable.

What we're looking for is the best of both, where space is dynamically allocated as and when required, and the time for push/pop is also as close to O(1) as possible.

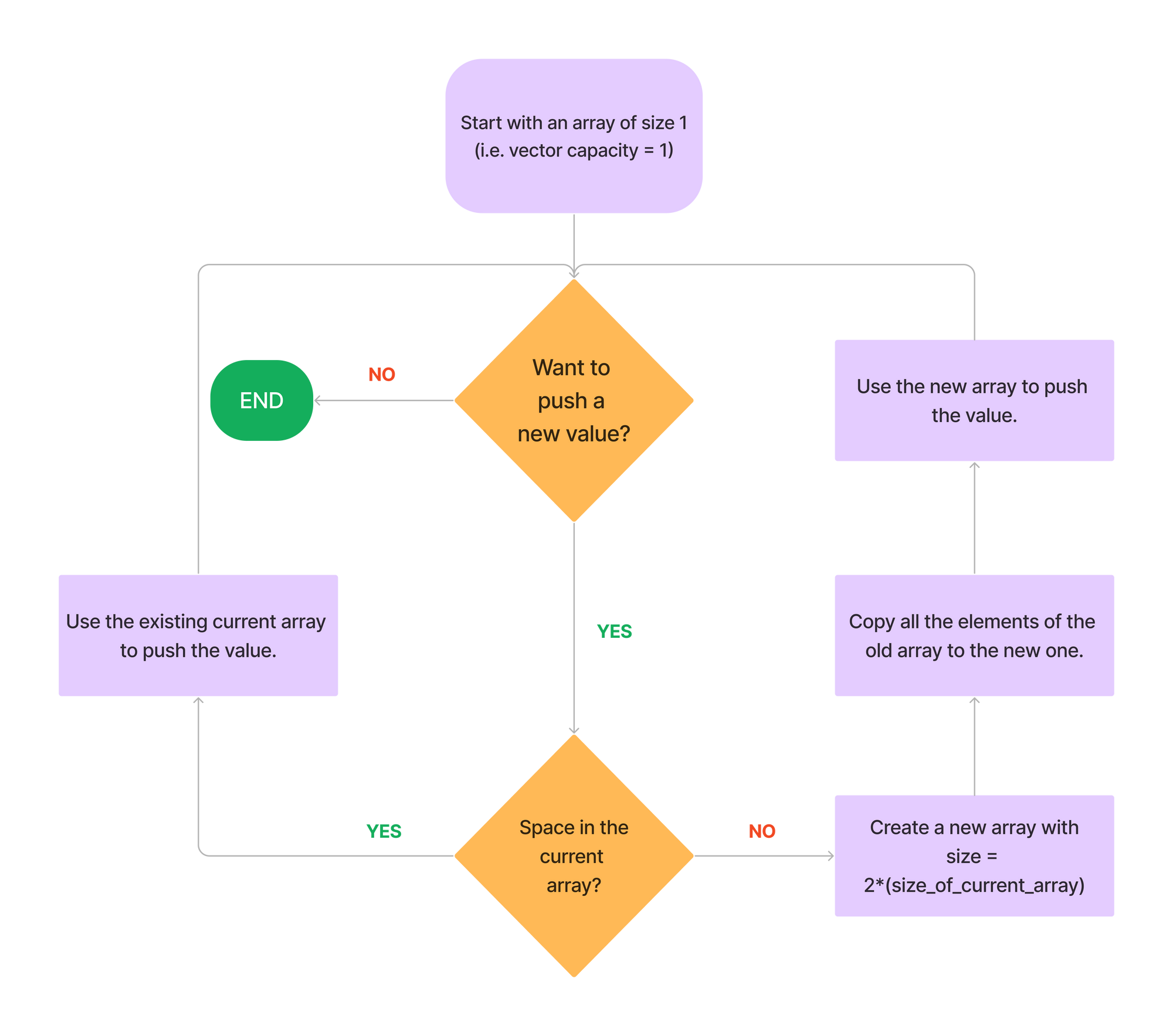

Finalizing the approach for push_back

Okay, let's try to improve on possibility 2 and finalize an approach that works:

- Start with an array of size 1. (i.e.capacity of vector = 1)

-

If, at any time, the size of the vector tends to become greater than its capacity:

- Create a new array having a size equal to twice the current capacity of the vector.

- Copy all the elements from the current array to this newly created array.

- De-allocate the memory of the old array and start pushing the new elements to the new array from now onwards.

- Otherwise, just use the empty space in the current array to add more elements to the end.

I hope the approach is at least 40% clear by now; the rest 60% will be done after the next couple of sections.

Also, if you're thinking about the time complexity, good, keep thinking.

Lot of talking already; let's implement it now

Boilerplate Code for the vector class

class vec {

int *arr;

int cur_size, cur_cap;

public:

// constructing with an array of size 1.

vec() {

arr = new int[1];

cur_size = 0;

cur_cap = 1;

}

};We've already discussed the logic in the above section, all that's left now is to convert English to code.

void push_back(int num) {

// If there's more space

if(cur_size < cur_cap)

arr[cur_size] = num, cur_size++;

else {

int *new_arr = new int[2*cur_cap]; // double the capacity.

for(int i = 0; i < cur_size; ++i)

new_arr[i] = arr[i];

delete[] arr; // de-allocate the old memory.

arr = new_arr; // Start using the newly created array.

cur_cap *= 2;

arr[cur_size] = num;

cur_size++;

}

}After this, I added 2-3 more straightforward functions to the class and tried it out.

Testing push_back

#include<bits/stdc++.h>

using namespace std;

class vec {

int *arr;

int cur_size, cur_cap;

public:

// constructing with an array of size 1.

vec() {

arr = new int[1];

cur_size = 0;

cur_cap = 1;

}

void push_back(int num) {

// If there's more space, then easy peasy

if(cur_size < cur_cap)

arr[cur_size] = num, cur_size++;

else { // some things need to be done

int *new_arr = new int[2*cur_cap]; // double the capacity.

// copy the elements from old to new.

for(int i = 0; i < cur_size; ++i)

new_arr[i] = arr[i];

delete[] arr; // de-allocate the old memory.

arr = new_arr; // Start using the newly created array.

cur_cap *= 2;

arr[cur_size] = num;

cur_size++;

}

}

void print_all() {

for(int i = 0; i < cur_size; ++i)

cout << arr[i] << ' ';

cout << '\n';

}

int size() {

return cur_size;

}

int capacity() {

return cur_cap;

}

};

int main()

{

vec v;

// Pushing 3 values.

v.push_back(1);

v.push_back(21);

v.push_back(3);

v.print_all();

cout << "Size: " << v.size() << ", Capacity: " << v.capacity() << "\n\n";

// Pushing 3 more values

v.push_back(12);

v.push_back(5);

v.push_back(2);

v.print_all();

cout << "Size: " << v.size() << ", Capacity: " << v.capacity() << "\n\n";

// Pushing 2 more values

v.push_back(4);

v.push_back(54);

v.print_all();

cout << "Size: " << v.size() << ", Capacity: " << v.capacity() << "\n\n";

// Pushing 1 more value (last time)

v.push_back(21);

v.print_all();

cout << "Size: " << v.size() << ", Capacity: " << v.capacity() << '\n';

return 0;

}Output (correct, as expected 😎)

1 21 3

Size: 3, Capacity: 4

1 21 3 12 5 2

Size: 6, Capacity: 8

1 21 3 12 5 2 4 54

Size: 8, Capacity: 8

1 21 3 12 5 2 4 54 21

Size: 9, Capacity: 16

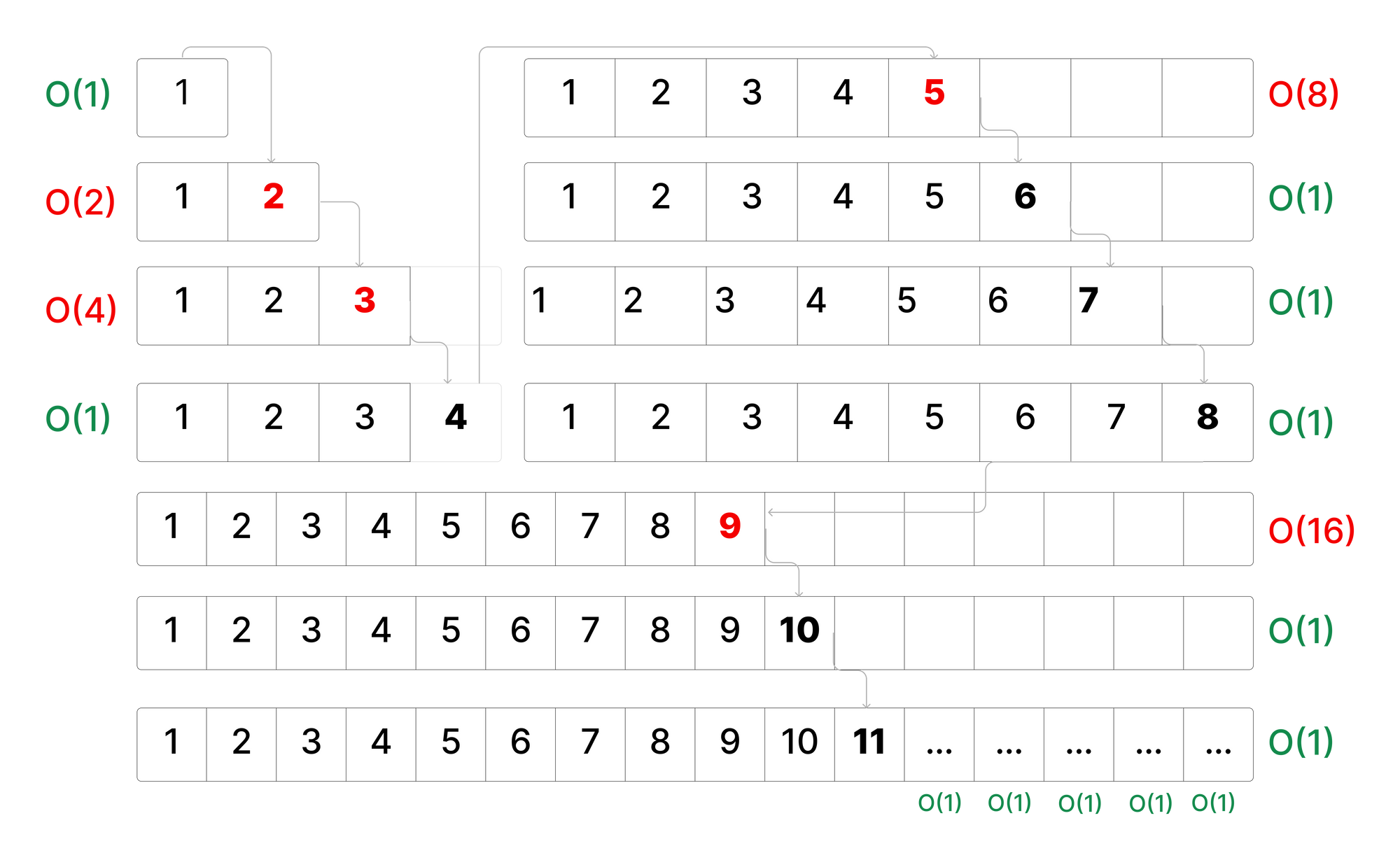

Time Complexity Analysis

If we notice, what's happening is that most of the time, the time complexity to push_back an element is O(1) (when the capacity is greater than size), but sometimes it's O(N) as well. (when the array needs to be resized to 2*capacity)

Well, contrary to the popular idiom, the devil actually lies in the zoomed-out picture here.

What I mean is, instead of looking at the individual time complexities, let's analyse how much time will be taken in totality if N push_back operations are done back-to-back.

Noticing even further, the number of operations required are:

- ~2k+1 operations, whenever n = 2k + 1

- just ~1 operation, otherwise

Another observation is that if we look for N push_backs, pt. 1 will be applicable approximately Log2N times, and all the other times, pt. 2 will be applicable.

Ops = \((\underbrace{2^1 + 2^2 + 2^3 + 2^4 + 2^5 + ...}_{\text{No. of terms =}~Log_2N})\) + (\(\underbrace{1 + 1 + 1 + 1 + 1 + ...}_{\text{Approximately N terms}}\))

Solving both the subparts:

Ops = 2\(\mathit{*}(2^{log_2N} - 1)\) + N

Finally, Ops = 3*N - 2 Therefore, Time Taken = ~3*N

Now, since it's seen above that the time complexity for N push_back operations is O(N), it means that in the bigger picture, the push_back operation is still O(1).

In this unusual case, the push_back operation has an amortized time complexity of O(1).

In layman's terms, the amortized time complexity is the expense per operation, when evaluated over a sequence of operations.

What's next?

This was just the push_back function, let's implement a few more functions like:

- pop_back()

- back()

- [] operator

Don't worry, none of these functions are anywhere as complex as the push_back function.

Also, I will share 1 other thing in the next article. (only if I feel like sharing xD)